Corruption Robustness

Corruption robustness, or statistical robustness, describes the ability of a model to cope with randomly distributed changes in its input data. Unlike in adversarial robustness, these changes are not assumed to be worst-case. Corruption robustness is primarily concerned with real-world data errors, such as camera or sensor corruptions in image data, but can in principle be extended to any type or distribution of errors. It is typically evaluated by testing on corrupted benchmark datasets, such as CIFAR-C and ImageNet-C, the popular corrupted variants of the CIFAR and ImageNet image classification datasets.

Figure 1: Examples of corrupted images from CIFAR-C and ImageNet-C datasets, showcasing common real-world noise and distortions.
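To make the contrast with worst-case perturbations concrete, the following minimal Python sketch measures accuracy on clean inputs and on randomly corrupted inputs at increasing severities, in the spirit of a corrupted benchmark evaluation. It assumes a toy setting: two synthetic 2D Gaussian blobs stand in for an image dataset, a nearest-centroid classifier stands in for a trained model, and the mapping from severity level to noise standard deviation is hypothetical, not the one used by CIFAR-C or ImageNet-C.

```python
import numpy as np

def make_toy_data(n_per_class=500, seed=0):
    """Two Gaussian blobs in 2D as a stand-in for a real image dataset."""
    rng = np.random.default_rng(seed)
    x0 = rng.normal(loc=(-1.0, 0.0), scale=0.5, size=(n_per_class, 2))
    x1 = rng.normal(loc=(1.0, 0.0), scale=0.5, size=(n_per_class, 2))
    x = np.vstack([x0, x1])
    y = np.concatenate([np.zeros(n_per_class, dtype=int),
                        np.ones(n_per_class, dtype=int)])
    return x, y

def nearest_centroid_predict(x, centroids):
    # Assign each point to the class whose centroid is closest (Euclidean distance).
    dists = np.linalg.norm(x[:, None, :] - centroids[None, :, :], axis=-1)
    return dists.argmin(axis=1)

def accuracy(x, y, centroids):
    return float((nearest_centroid_predict(x, centroids) == y).mean())

def gaussian_noise(x, std, rng):
    # Random (not worst-case) corruption: i.i.d. Gaussian noise on every feature.
    return x + rng.normal(scale=std, size=x.shape)

x, y = make_toy_data()
centroids = np.stack([x[y == c].mean(axis=0) for c in (0, 1)])
rng = np.random.default_rng(1)

clean_acc = accuracy(x, y, centroids)
# Hypothetical severity levels 1..5 mapped to increasing noise standard deviations.
severity_std = {1: 0.1, 2: 0.25, 3: 0.5, 4: 0.75, 5: 1.0}
corrupted_acc = {s: accuracy(gaussian_noise(x, std, rng), y, centroids)
                 for s, std in severity_std.items()}

print(f"clean: {clean_acc:.3f}")
for s, acc in corrupted_acc.items():
    print(f"severity {s}: {acc:.3f}")
```

The gap between the clean accuracy and the accuracies at higher severities is the kind of degradation that corrupted benchmarks are designed to expose.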

Research shows that vision models in particular suffer significant performance degradation when confronted with such real-world corruptions. One research question is therefore how to train models that are as robust as possible against all known types of random corruption. Approaches to this question include choices of model architecture and optimisation and, most prominently, training data augmentation. Even state-of-the-art training strategies have not yet closed the gap between model performance on clean and on corrupted data, and many approaches gain corruption robustness at the expense of accuracy on clean data. The holy grail is therefore a training strategy that reliably improves both corruption robustness and accuracy on clean test data at the same time.

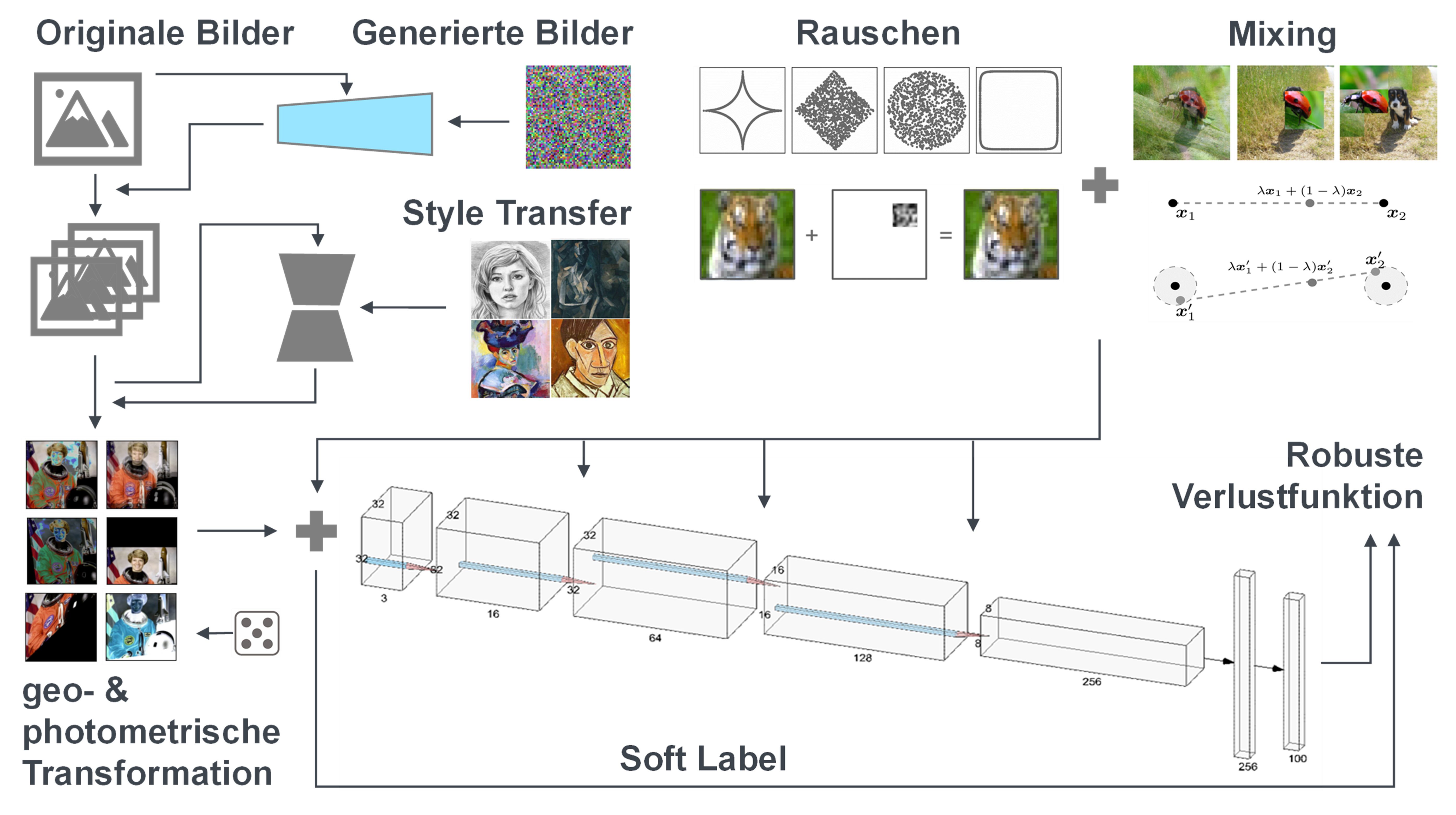

Figure 2: Robust image model training pipeline, featuring various data augmentation techniques to improve corruption robustness.
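Random corruption augmentation, one family of data augmentation techniques for such a pipeline, can be sketched as follows in Python. The sketch assumes float images in [0, 1] with shape (N, H, W, C); the three corruption functions are simplified hypothetical stand-ins rather than the ImageNet-C implementations, and the probability `p` of corrupting an image is an illustrative parameter.

```python
import numpy as np

# Simplified, hypothetical corruptions on float images in [0, 1];
# they only loosely mimic the ImageNet-C corruption types.
def gaussian_noise(img, severity, rng):
    return img + rng.normal(scale=0.04 * severity, size=img.shape)

def brightness(img, severity, rng):
    return img + 0.1 * severity

def contrast(img, severity, rng):
    # Shrink each channel towards its spatial mean.
    mean = img.mean(axis=(0, 1), keepdims=True)
    return (img - mean) * (1.0 - 0.15 * severity) + mean

CORRUPTIONS = [gaussian_noise, brightness, contrast]

def augment_batch(images, rng, p=0.5, max_severity=5):
    """With probability p, apply one randomly chosen corruption at a random
    severity to each image; the remaining images stay clean."""
    out = images.copy()  # leave the caller's batch untouched
    for i in range(len(out)):
        if rng.random() < p:
            corruption = CORRUPTIONS[rng.integers(len(CORRUPTIONS))]
            severity = int(rng.integers(1, max_severity + 1))
            out[i] = corruption(out[i], severity, rng)
    # Keep pixel values in the valid range after corruption.
    return np.clip(out, 0.0, 1.0)

rng = np.random.default_rng(0)
batch = rng.random((8, 32, 32, 3))  # stand-in for a batch of training images
augmented = augment_batch(batch, rng)
```

Leaving a share of each batch clean (here `p=0.5`) is one simple lever against the loss of clean accuracy described above; how well it works depends on the model, the data and the chosen corruptions.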

Another research question is how to evaluate corruption robustness comprehensively and expressively. Ultimately, models should be robust against the entire relevant set of possible corruptions at meaningful severities, so realistic and challenging corruption types and meaningful severity levels need to be developed and evaluated. This line of research aims to produce reliable and interpretable measures of corruption robustness for given models.
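One widely used measure, introduced in [1] for ImageNet-C, is the mean corruption error (mCE): for each corruption type, the model's top-1 error rates summed over all severity levels are divided by the summed error rates of a baseline model (AlexNet in [1]), and these corruption errors are then averaged over all corruption types. A minimal Python sketch, assuming the error rates have already been measured; the numbers below are made up for illustration, and [1] uses five severity levels rather than three.

```python
import numpy as np

def corruption_errors(model_err, baseline_err):
    """Per-corruption error CE_c: the model's error rates summed over severities,
    normalised by the baseline's summed error rates.
    Both inputs have shape (num_corruptions, num_severities)."""
    model_err = np.asarray(model_err, dtype=float)
    baseline_err = np.asarray(baseline_err, dtype=float)
    return model_err.sum(axis=1) / baseline_err.sum(axis=1)

def mean_corruption_error(model_err, baseline_err):
    # mCE: unweighted average of CE_c over all corruption types.
    return float(corruption_errors(model_err, baseline_err).mean())

# Made-up error rates for two corruption types at three severities (illustration only).
model = [[0.2, 0.3, 0.4], [0.1, 0.2, 0.3]]
baseline = [[0.4, 0.6, 0.8], [0.2, 0.3, 0.5]]

ce = corruption_errors(model, baseline)
mce = mean_corruption_error(model, baseline)
print("CE per corruption:", ce)
print("mCE:", mce)
```

Values below 1 mean the model errs less than the baseline; normalising by a baseline keeps corruption types of very different difficulty from dominating the average.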

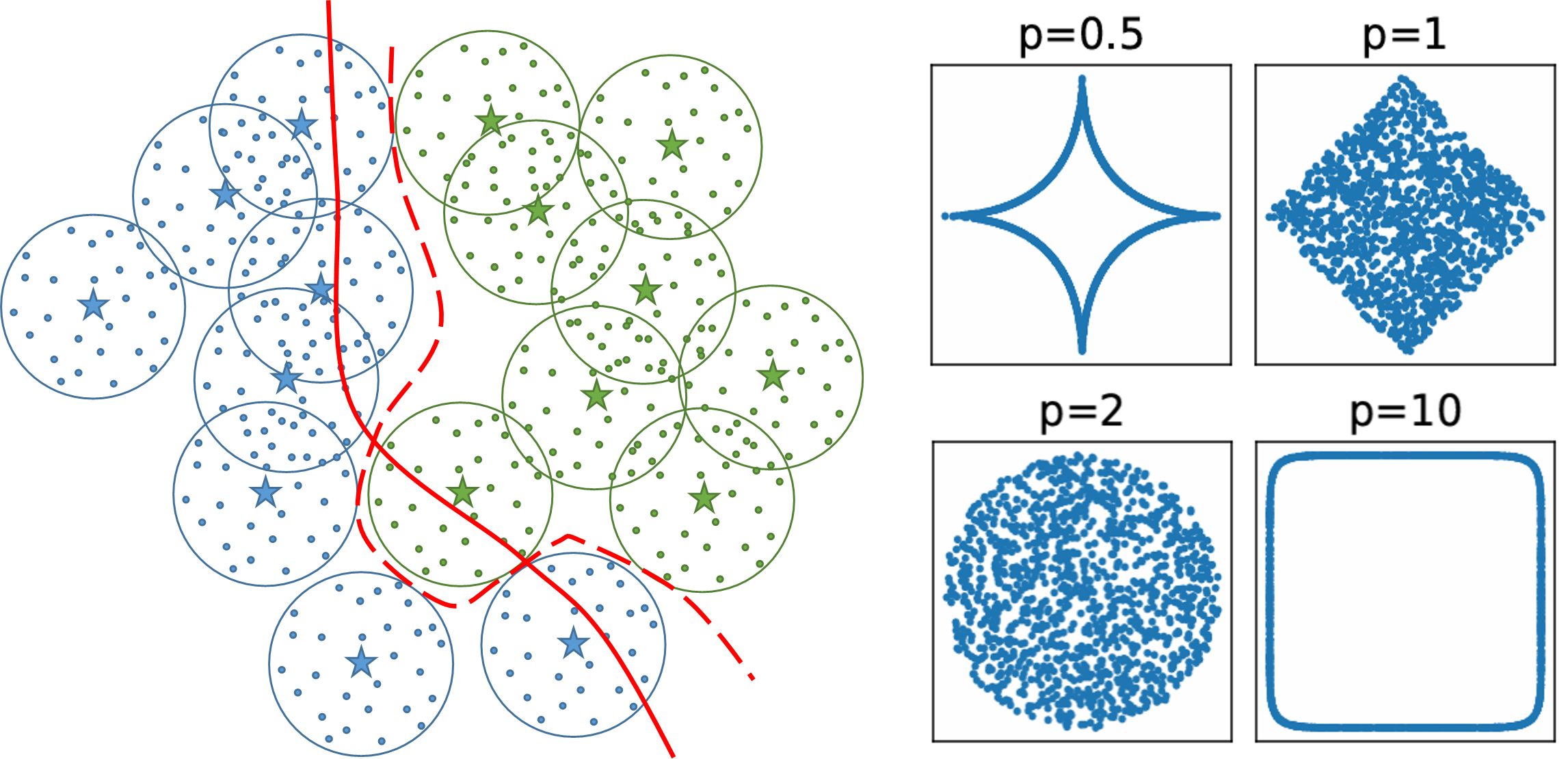

Figure 3: Left: 2D concept of testing statistical robustness with noise centered around the original data points. Right: Examples of random noise distributions for robustness testing.
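The concept on the left of Figure 3 can be sketched by adding noise drawn uniformly from an L_p ball of radius epsilon around each data point. The Python sketch below assumes this uniform-in-ball noise model and uses the sampling construction of Barthe, Guédon, Mendelson and Naor (2005) for finite p >= 1 and a uniform cube for p = infinity; it is an illustration, not necessarily the sampling procedure of the p-norm publication listed below. Inputs such as images are assumed to be flattened into an array of shape (n, dim).

```python
import numpy as np

def sample_lp_ball(n, dim, p, rng):
    """Draw n points uniformly from the unit L_p ball in R^dim (p >= 1).
    Finite p: Y_i with density proportional to exp(-|t|^p), Z ~ Exp(1),
    then Y / (||Y||_p^p + Z)^(1/p) is uniform in the ball (Barthe et al., 2005)."""
    if np.isinf(p):
        # The unit L_inf ball is the cube [-1, 1]^dim, so sample it directly.
        return rng.uniform(-1.0, 1.0, size=(n, dim))
    # |Y_i| = G^(1/p) with G ~ Gamma(1/p, 1) has density proportional to exp(-|t|^p).
    g = rng.gamma(shape=1.0 / p, scale=1.0, size=(n, dim))
    signs = rng.choice([-1.0, 1.0], size=(n, dim))
    y = signs * g ** (1.0 / p)
    z = rng.exponential(scale=1.0, size=(n, 1))
    denom = (np.sum(np.abs(y) ** p, axis=1, keepdims=True) + z) ** (1.0 / p)
    return y / denom

def corrupt_lp(x, epsilon, p, rng):
    # Add noise drawn uniformly from the L_p ball of radius epsilon around each row of x.
    noise = sample_lp_ball(len(x), x.shape[1], p, rng)
    return x + epsilon * noise

rng = np.random.default_rng(0)
points = np.array([[0.0, 0.0], [1.0, 1.0]])
noisy_l1 = corrupt_lp(points, epsilon=0.1, p=1.0, rng=rng)
noisy_l2 = corrupt_lp(points, epsilon=0.1, p=2.0, rng=rng)
```

Varying p changes the shape of the noise region (a diamond for p = 1, a disc for p = 2, a square for p = infinity in 2D), which is one way to obtain different random noise distributions for robustness testing.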

Our research emphasizes the importance of corruption robustness, which is closely tied to the overall reliability of machine learning models. Below, we list popular literature on the topic together with a selection of our own publications in this area.

Links

- Popular literature:
  - [1] Benchmarking Neural Network Robustness to Common Corruptions and Perturbations
  - [2] On Interaction Between Augmentations and Corruptions in Natural Corruption Robustness
- Our references:
  - [3] Utilizing Class Separation Distance for the Evaluation of Corruption Robustness of Machine Learning Classifiers
  - [4] Investigating the Corruption Robustness of Image Classifiers with Random p-norm Corruptions